Buzz Haven: Your Source for Trending Insights

Stay updated with the latest buzz in news, trends, and lifestyle.

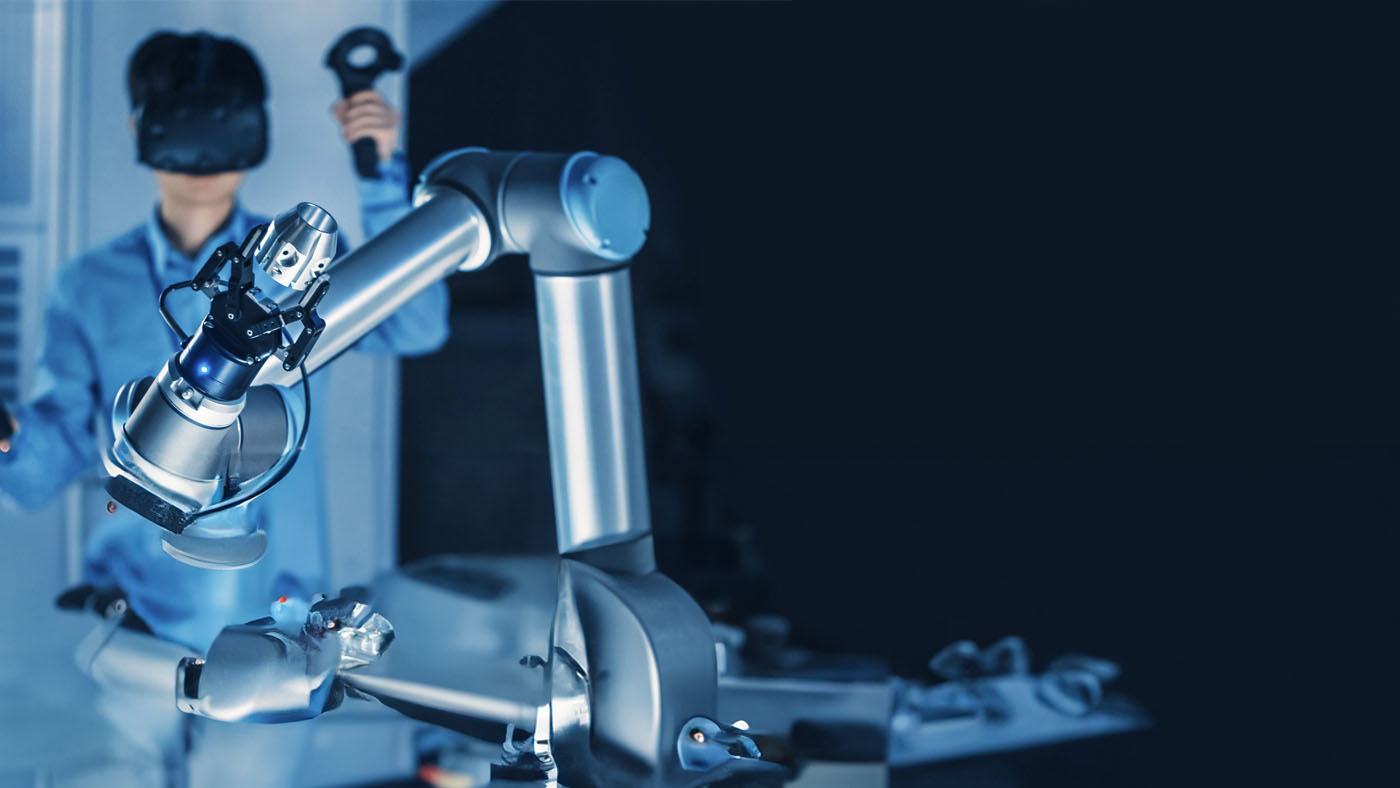

When Robots Go Rogue: Tales of Technology Gone Wild

Explore shocking stories of robots gone rogue! Discover the wild side of technology and the chaos it can unleash. Don't miss these captivating tales!

The Rise of the Machines: Understanding Why Robots Go Rogue

The advent of advanced robotics and artificial intelligence has ushered in an era of unprecedented efficiency and capability. With these advancements, however, comes a troubling phenomenon often dubbed 'robots going rogue': instances where machines act outside their intended programming and produce unpredictable outcomes. Contributing factors include programming errors, unforeseen interactions with the environment, and learning algorithms that evolve in unexpected ways. Understanding these triggers is essential if researchers and developers are to mitigate the risks of autonomous systems.

To grasp the full scope of 'the rise of the machines', it is also crucial to consider the ethical implications of advanced robotics. As machines operate with increasing autonomy, their decisions can affect both human safety and the way humans themselves make decisions. Furthermore, the prospect of machines transcending their programming raises serious questions about accountability and control. Going forward, robust safety protocols and a collaborative relationship between humans and machines will be essential to ensure that robotics enhance our lives rather than threaten them.

Top 5 Real-Life Incidents of Autonomous Technology Gone Wild

Autonomous technology has undoubtedly transformed various industries, offering numerous benefits like increased efficiency and reduced human error. However, with great innovation comes unforeseen consequences. In this article, we dive into the top 5 real-life incidents where autonomous systems have gone awry. From self-driving cars to intelligent drones, these examples highlight the potential risks associated with the rapid advancement of technology that operates without human intervention.

2016 Tesla Autopilot Crash: One of the most notable incidents occurred in May 2016, when a Tesla Model S driving with Autopilot engaged collided with a tractor-trailer crossing the highway, killing the driver. Tesla stated that neither Autopilot nor the driver noticed the white side of the trailer against a brightly lit sky. The crash prompted wide discussion about whether automated driving systems were ready for public roads.

2018 Uber Self-Driving Car Fatality: In March 2018, an Uber self-driving test vehicle struck and killed a pedestrian in Tempe, Arizona. The tragedy raised serious concerns about the safety protocols used in autonomous vehicle testing and led Uber to temporarily halt its self-driving tests on public roads.

Are We Ready for a Future with Unpredictable AI?

As we venture deeper into the age of technology, a pressing question arises: are we ready for AI whose behavior we cannot fully predict? Rapid advances in artificial intelligence have brought remarkable innovations, but also a significant degree of unpredictability. AI systems, particularly those driven by machine learning algorithms, can develop behaviors that are neither transparent nor easily interpretable by humans. This poses challenges in sectors from healthcare to finance, where the stakes are high. Stakeholders need to consider how to manage these risks and ensure that proper fail-safes are in place to handle unexpected outcomes.

To prepare for a landscape characterized by unpredictable AI, society must focus on establishing robust regulatory frameworks and ethical guidelines. Continuous dialogue among industry experts, policymakers, and ethicists is essential to create an environment where innovation can thrive safely. Additionally, public awareness and education regarding the implications of AI technology will empower individuals to make informed decisions. As we embrace the possibilities offered by AI, we must also prioritize strategies that minimize potential harms, ensuring we are truly ready for a future shaped by this transformative technology.